I get this question almost every week.

A blogger emails me in a panic. A client forwards a screenshot. A red bar. “82% AI-generated.”

And the message underneath always sounds the same:

- “Will this get my site rejected from AdSense?”

- “Is Google going to punish me?”

- “Do I need to rewrite everything?”

I’ve been testing AI content detectors hands-on since they first showed up, and in 2026 the confusion is worse than ever. The tools haven’t gotten much better; bloggers just trust them too much. Let’s talk honestly. I’ll show you what I tested, what broke, and what actually matters if you care about rankings, AdSense approval, and your sanity.

Why AI Content Detectors Exist in the First Place

AI detectors didn’t appear because Google asked for them. They appeared because publishers panicked.

The moment everything changed

When AI writing tools became mainstream, two things happened fast:

- Content production exploded.

- Editors lost confidence in spotting automation.

So detectors stepped in, promising a simple answer to a messy question: “Was this written by a human?” Sounds reasonable. But here’s the problem.

What detectors claim to measure

Most AI content detectors in 2026 (like Originality.ai or Winston AI) analyze:

- Sentence predictability (Perplexity): How “random” is the word choice?

- Word probability patterns: Does the text follow the statistical paths of a Large Language Model?

- Structural smoothness (Burstiness): Is the sentence length too uniform?
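To make perplexity and burstiness less abstract, here is a toy sketch in Python. It is not how Originality.ai, Winston AI, or any other commercial detector works: real tools score text with large language models, while this sketch assumes a simple unigram word-frequency model with add-one smoothing as a stand-in for the language model. It also assumes burstiness is measured as the coefficient of variation of sentence lengths, which is one common definition among several. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def split_sentences(text):
    # Naive splitter (an assumption): breaks after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(text):
    # Lowercase word tokens; punctuation is dropped.
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text):
    """Coefficient of variation of sentence lengths (in words).
    0.0 means every sentence has the same length; higher means more variation."""
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a toy unigram model built from `reference`,
    with add-one (Laplace) smoothing. Lower = more predictable to the model."""
    ref_counts = Counter(tokenize(reference))
    words = tokenize(text)
    if not words:
        raise ValueError("text has no words to score")
    vocab_size = len(set(ref_counts) | set(words))
    total = sum(ref_counts.values())
    log_prob = sum(math.log((ref_counts[w] + 1) / (total + vocab_size)) for w in words)
    return math.exp(-log_prob / len(words))


if __name__ == "__main__":
    uniform = "The tool is fast. The tool is cheap. The tool is good."
    varied = "It broke. After three weeks of testing on real client posts, the scores still disagreed. Why?"
    print(round(burstiness(uniform), 2))  # 0.0
    print(round(burstiness(varied), 2))   # 1.02
    # Words the model has seen before look "more predictable" than unfamiliar ones.
    print(unigram_perplexity("the tool is fast", uniform) < unigram_perplexity("zebras juggle purple violins", uniform))
```

Notice that neither number records who wrote the text. Both only describe its statistics, so a careful human who writes evenly paced sentences lands in the same place as a model that does.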

They present this as certainty. It isn’t. At best, they give a probability guess. At worst, they manufacture confidence where none exists.

I Tested AI Content Detectors in 2026 — Here’s What Actually Happened

I didn’t rely on demos or marketing pages. I tested these tools the same way real bloggers work.

My real-world test setup

I ran three types of articles through multiple detectors:

- A fully human-written blog post: Written from scratch, no AI assistance, personal examples included.

- An AI-assisted article: I used AI for structure, then rewrote everything manually—tone, pacing, examples.

- Raw AI output: Minimal editing. Exactly what lazy publishers submit.

The results were… uncomfortable

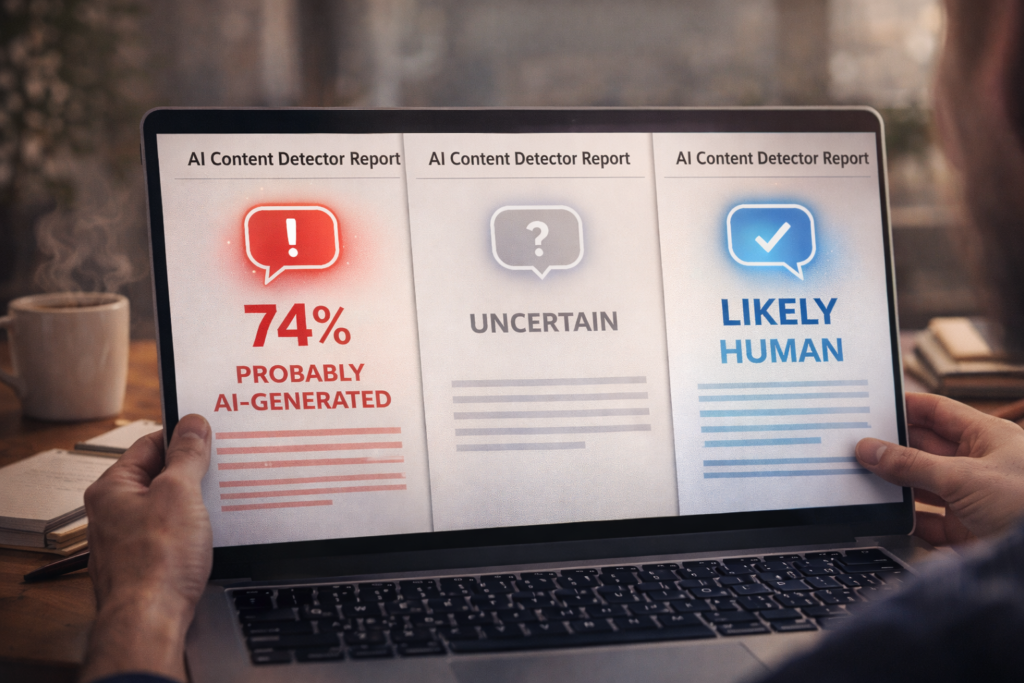

- One detector flagged my human article as 74% AI.

- Another rated the edited article as “Likely Human.”

- A third tool marked all three as “uncertain.”

The kicker? Two tools gave opposite scores for the exact same text.

The 2026 reality: these tools aren’t detecting authorship; they’re reacting to patterns. If you write in a clear, structured way, they will often flag you simply because you write “too well.”

How AI Content Detectors Actually Work (Without the Tech Jargon)

What detectors are good at:

They spot repetitive sentence rhythm, overly smooth transitions, and “safe” phrasing. Raw AI content triggers all of this because language models tend to pick the most statistically likely next word, which pulls their output toward the “average.”
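A pattern check of this kind can be sketched in a few lines. This is a hypothetical illustration, not any vendor’s method: it assumes a hand-picked list of stock transitions (`STOCK_TRANSITIONS`, invented for this example) and a naive sentence splitter, then reports how often sentences start with the same word and how often they open with a stock transition.

```python
import re
from collections import Counter

# Hypothetical list of "safe" stock transitions; not taken from any real detector.
STOCK_TRANSITIONS = ("moreover", "furthermore", "additionally", "in addition", "in conclusion", "overall")


def split_sentences(text):
    # Naive splitter (an assumption): breaks after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def pattern_signals(text):
    """Two toy 'smoothness' signals, each a share between 0 and 1:
    - opener_repetition: share of sentences starting with the most common first word
    - transition_share: share of sentences opening with a stock transition"""
    sentences = split_sentences(text)
    if not sentences:
        return {"opener_repetition": 0.0, "transition_share": 0.0}
    openers = Counter(s.split()[0].lower().strip(",;:") for s in sentences)
    transitions = sum(1 for s in sentences if s.lower().startswith(STOCK_TRANSITIONS))
    return {
        "opener_repetition": max(openers.values()) / len(sentences),
        "transition_share": transitions / len(sentences),
    }


if __name__ == "__main__":
    smooth = "The tool is fast. The tool is cheap. Moreover, the tool is good. In conclusion, the tool works."
    messy = "I tried it. It broke. Then I swore at my laptop."
    print(pattern_signals(smooth))
    print(pattern_signals(messy))
```

A human who habitually opens with “Moreover” scores exactly like a model that does, which is the false-positive problem in miniature.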

What detectors completely miss:

They cannot understand lived experience, original opinions, or intent.

When I wrote about a personal AdSense rejection I dealt with years ago, one detector still flagged it as “AI-like” because the writing was clear and confident. Apparently, sounding competent is suspicious now.

Comparison: Common Types of AI Content Detectors in 2026

| Detector Type | What It Claims | Accuracy in Practice | Biggest Issue |
|---|---|---|---|
| Probability-based | AI likelihood score | Low | High false positives |
| Pattern analysis | Sentence structure | Moderate | Flags good human writing |
| 2026 hybrid models | Multiple signals | Inconsistent | Contradictory results |

The Biggest Myths Bloggers Still Believe About AI Detectors

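- “Google uses AI detectors to penalize content”: No. Google’s ranking systems, including its SpamBrain spam-detection system, look for value, not the method of production. Google has publicly stated that it rewards helpful, high-quality content however it is produced, with an emphasis on E-E-A-T (experience, expertise, authoritativeness, and trustworthiness). What violates its spam policies is using automation mainly to manipulate rankings.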

- “AI detection equals bad SEO”: I’ve seen AI-assisted articles rank on page one while human-written content sank. SEO rewards outcomes (time on page, clicks, utility), not purity tests.

Why AI Detectors Fail on High-Quality Blog Content

- Human editing breaks detector logic: Once you add personal phrasing and imperfect sentence flow, the tool panics because the text is neither “perfect AI” nor “purely random.”

- Expertise looks like automation: When you write confidently about a niche such as SEO, finance, or tech, the clarity often resembles AI patterns, so the detector reads competence as a machine fingerprint.

🟦 Pro-Tip: What I Do When a Detector Flags My Content

Don’t rewrite the article blindly. Instead:

- Add one personal anecdote (“Last year, I tried this and…”)

- Insert a clear opinion you’d defend publicly.

- Reference a specific mistake you’ve made.

- Break one paragraph into a single, punchy sentence.

Real writing is messy. Add these “human glitches” and your score will often drop naturally.

What Actually Matters for Google AdSense Approval in 2026

If AdSense is your fear, focus on these three things:

- Original, helpful content: Does it solve a problem that isn’t already solved by 1,000 other sites?

- Trust signals: Do you have an About page, contact information, and a clear Privacy Policy?

- No thin or deceptive pages: AdSense rejects “thin” content (300-word fluff pieces), whether written by AI or humans.

Using AI tools safely as a blogger:

I use AI for outlines, idea expansion, and clarity improvements. Then I rewrite. Every time. AI speeds me up; it doesn’t replace my judgment.

Should You Care About AI Content Detectors at All?

Yes—but only as a rough indicator. If a detector flags your work as 90% AI, it might mean your writing is a bit too dry or generic. Use it as a signal to “humanize” your tone, add some grit, and inject your personality.

In 2026, no AI detector decides your future. Your readers do.